Introduction

Due to the cost of LLM APIs, it is critical to enforce rate limiting and granular access control on them. Furthermore, concerns over sensitive data leakage are leading more and more companies to restrict or entirely ban access to LLMs.

In this blog post we are going to show how we can use SlashID to:

- Block or monitor requests to OpenAI APIs that contain sensitive data

- Enforce RBAC on OpenAI APIs and safely store the OpenAI API Key

In a future article, we’ll show how to enforce rate limiting and throttling.

OpenAI APIs

The OpenAI APIs allow applications to access models and other utilities that OpenAI exposes.

For instance, you can easily generate a chat completion with a call like the following:

```bash
curl https://api.openai.com/v1/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $OPENAI_API_KEY" \
  -d '{
    "model": "gpt-3.5-turbo",
    "messages": [{"role": "user", "content": "Say this is a test!"}],
    "temperature": 0.7
  }'
```

Introducing Gate

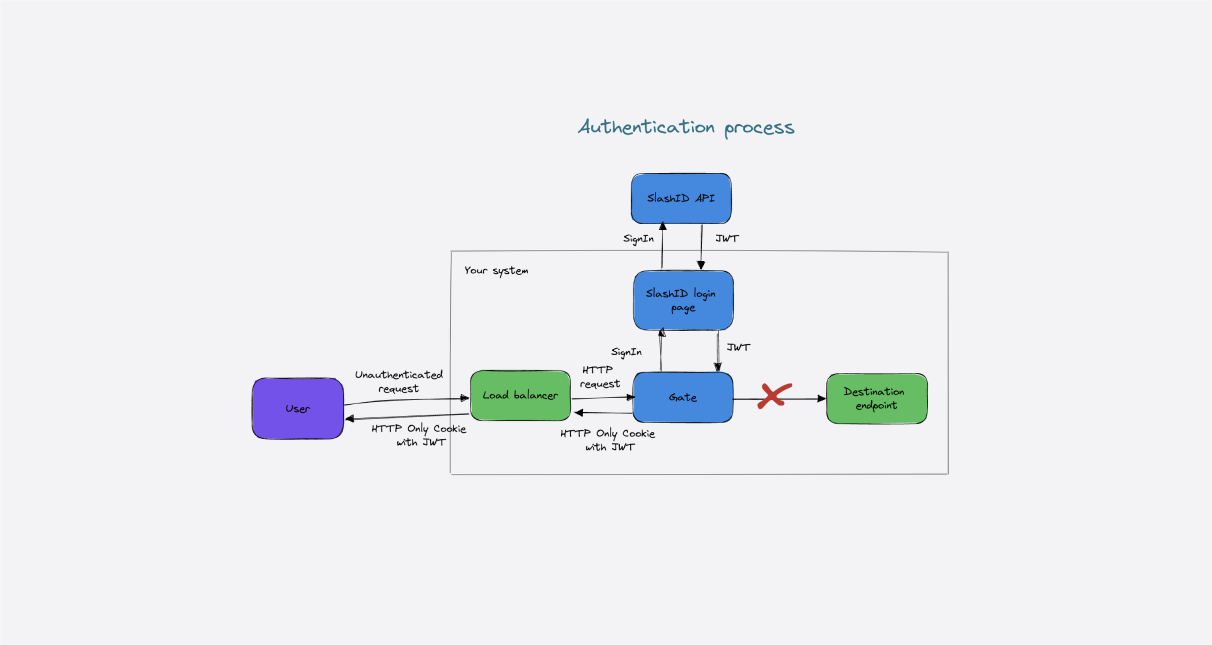

At SlashID, we believe that security begins with Identity. Gate is our identity-aware edge authorizer to protect APIs and workloads.

Gate can be used to monitor or enforce authentication, authorization and identity-based rate limiting on APIs and workloads, as well as to detect, anonymize, or block personally identifiable information (PII) and sensitive data exposed through your APIs or workloads.

Deploying Gate

In this blog post, we’ll assume you deploy Gate as a standalone service and proxy OpenAI traffic through it. We use Docker Compose for this exercise:

```yaml
version: '3.7'
services:
  gate:
    image: slashid/gate
    ports:
      - 8080:8080
    command: [--yaml, /gate.yaml]
    volumes:
      - ./gate.yaml:/gate.yaml
```

In its most basic form, Gate simply proxies a request to the OpenAI API and logs it. This is what the gate.yaml configuration file looks like:

```yaml
gate:
  port: '8080'
  log:
    format: text
    level: debug
  urls:
    - pattern: '*/v1/chat/completions*'
      target: https://api.openai.com/
```

Sending a request through Gate without an API key confirms that Gate forwards it to OpenAI, which rejects it:

```
curl https://gate:8080/v1/chat/completions

{
  "error": {
    "message": "You didn't provide an API key. You need to provide your API key in an Authorization header using Bearer auth (i.e. Authorization: Bearer YOUR_KEY), or as the password field (with blank username) if you're accessing the API from your browser and are prompted for a username and password. You can obtain an API key from https://platform.openai.com/account/api-keys.",
    "type": "invalid_request_error",
    "param": null,
    "code": null
  }
}
```

Block requests containing PII data

We can use the Gate PII detection plugin together with the OPA plugin to block requests to OpenAI APIs containing PII data:

Note: In the examples below, we embed the OPA policies directly in the Gate configuration, but they can also be served through a bundle.

Please check out our documentation to learn more about the plugin.

```yaml
gate:
  plugins:
    - id: pii_anonymizer
      type: anonymizer
      enabled: false
      parameters:
        anonymizers: |
          DEFAULT:
            type: keep
    - id: authz_deny_pii
      type: opa
      enabled: false
      intercept: request
      parameters:
        policy_decision_path: /authz/allow
        policy: |
          package authz

          import future.keywords.if

          default allow := false

          key_found(obj, key) if { obj[key] }

          allow if not key_found(input.request.http.headers, "X-Gate-Anonymize-1")
  # ... port and log settings as in the previous example ...
  urls:
    - pattern: '*/v1/chat/completions*'
      target: https://api.openai.com/
      plugins:
        pii_anonymizer:
          enabled: true
        authz_deny_pii:
          enabled: true
```

The PII detection plugin can modify, hash, strip, or simply detect PII in requests and responses. In this example, it is configured only to detect the presence of PII in requests:

```yaml
anonymizers: |
  DEFAULT:
    type: keep
```

If any PII is detected, the plugin adds one header per detected element to the request or response: X-Gate-Anonymize-1 through X-Gate-Anonymize-N, where N is the number of PII elements detected.
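To make the numbering scheme concrete, here is a minimal Python sketch of how one header per detected element could be added. It is an illustration only, not Gate's implementation: the function name `annotate_pii_headers` is hypothetical, and using the entity type as the header value is an assumption, since the values Gate actually sets are not described here.

```python
from typing import Dict, List


def annotate_pii_headers(headers: Dict[str, str], detected: List[str]) -> Dict[str, str]:
    """Return a copy of `headers` with one X-Gate-Anonymize-N header per detected PII element.

    Hypothetical illustration: the header value (the entity type) is an assumption.
    """
    annotated = dict(headers)
    # Numbering starts at 1, so X-Gate-Anonymize-1 exists whenever anything was found.
    for n, entity_type in enumerate(detected, start=1):
        annotated[f"X-Gate-Anonymize-{n}"] = entity_type
    return annotated


print(annotate_pii_headers({"Content-Type": "application/json"}, ["EMAIL_ADDRESS", "PHONE_NUMBER"]))
```

Because numbering starts at 1, checking for X-Gate-Anonymize-1 alone is enough to know whether anything was detected.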

The `authz_deny_pii` instance of the OPA plugin enforces an OPA policy that blocks a request if the request contains an `X-Gate-Anonymize-1` header.

In other words, it blocks the request if the PII detection plugin found at least one PII element in the request.
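The decision logic of the Rego policy can be mirrored in a few lines of Python, which may help when reasoning about it. This is a sketch for illustration only (the function name `allow` simply echoes the policy's rule name); Gate evaluates the actual Rego policy with OPA. Like the policy's map lookup, the sketch matches the header name exactly.

```python
from typing import Mapping


def allow(headers: Mapping[str, str]) -> bool:
    """Python mirror of the authz_deny_pii Rego policy (illustration only).

    Rego: allow if not key_found(input.request.http.headers, "X-Gate-Anonymize-1")
    with `default allow := false` covering every other case.
    """
    # The first marker header exists exactly when at least one PII element was found.
    return "X-Gate-Anonymize-1" not in headers


print(allow({"Content-Type": "application/json"}))      # no PII detected -> True
print(allow({"X-Gate-Anonymize-1": "EMAIL_ADDRESS"}))  # PII detected -> False, request is blocked
```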

Let’s see an example in action:

```
curl -vL https://gate:8080/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "gpt-3.5-turbo",
    "messages": [{"role": "user", "content": "email@email.com"}],
    "temperature": 0.7
  }'
...
> POST /v1/chat/completions HTTP/1.1
> Host: gate:8080
> User-Agent: curl/7.87.0
> Accept: */*
> Content-Type: application/json
> Content-Length: 128
>
* Mark bundle as not supporting multiuse
< HTTP/1.1 403 Forbidden
< Via: 1.0 gate
< Date: Thu, 14 Sep 2023 01:57:40 GMT
< Content-Length: 0
```

Blocking other sensitive data

The PII detection plugin supports a number of PII selectors out of the box, but it is not limited to them. It is also possible to define custom categories of data for the plugin to detect.
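Conceptually, a custom category pairs an entity name with a detection rule, such as a regular expression or a list of denied words. The following Python sketch illustrates the idea with the two rules used in this section: a regular expression for mentions of Acme Corp and a deny list containing the word "confidential". It is not Gate's implementation; the function name `detect_custom_entities`, the entity name `CONFIDENTIAL_MARKER`, and the whole-word, case-insensitive matching of deny-list words are assumptions made for illustration.

```python
import re
from typing import List, Tuple

# Hypothetical rule set mirroring this section's example: one regex rule and one deny list.
REGEX_RULES = {"ACME_SENSITIVE_DATA": re.compile(r"Acme Corp")}
DENY_LISTS = {"CONFIDENTIAL_MARKER": ["confidential"]}


def detect_custom_entities(text: str) -> List[Tuple[str, str]]:
    """Return (entity, matched text) pairs found in `text`."""
    findings: List[Tuple[str, str]] = []
    for entity, pattern in REGEX_RULES.items():
        findings.extend((entity, m.group(0)) for m in pattern.finditer(text))
    for entity, words in DENY_LISTS.items():
        for word in words:
            # Whole-word, case-insensitive match: "Confidential" is caught, "confidentiality" is not.
            for m in re.finditer(rf"\b{re.escape(word)}\b", text, flags=re.IGNORECASE):
                findings.append((entity, m.group(0)))
    return findings


print(detect_custom_entities("This data is confidential"))
print(detect_custom_entities("Quarterly numbers for Acme Corp"))
```

Any non-empty result would correspond to a detection, which in Gate's case is what triggers the X-Gate-Anonymize-N headers.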

In the example below, we define patterns for data that either contains the word "confidential" or mentions Acme Corp:

```yaml
analyzer_ad_hoc_recognizers: |
  - name: Acme Corp regex
    supported_language: en
    supported_entity: ACME_SENSITIVE_DATA
    patterns:
      - name: acme_data
        regex: "Acme Corp"
  - name: deny_list
    deny_list: ["confidential"]
```

Once the patterns are defined, we can see them in action as in the previous section:

```
curl -vL https://gate:8080/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "gpt-3.5-turbo",
    "messages": [{"role": "user", "content": "This data is confidential"}],
    "temperature": 0.7
  }'
...
> POST /v1/chat/completions HTTP/1.1
> Host: gate:8080
> User-Agent: curl/7.87.0
> Accept: */*
> Content-Type: application/json
> Content-Length: 128
>
* Mark bundle as not supporting multiuse
< HTTP/1.1 403 Forbidden
< Via: 1.0 gate
< Date: Thu, 14 Sep 2023 01:57:40 GMT
< Content-Length: 0
```

Running in monitoring mode

In large, complex environments, it is not always possible or advisable to block requests. Gate can run a number of plugins in monitoring mode, in which an alert is triggered but requests are not modified.
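The difference between blocking and monitoring comes down to what happens after a negative policy decision. Here is a minimal Python sketch of that logic; the `handle_decision` function, its string return values, and the log message are hypothetical and only illustrate the behavior described above, not Gate's internals.

```python
import logging

logger = logging.getLogger("gate-sketch")


def handle_decision(allowed: bool, monitoring_mode: bool) -> str:
    """Return "forward" or "block" for a policy decision (hypothetical sketch)."""
    if allowed:
        return "forward"
    if monitoring_mode:
        # Monitoring mode: record the violation, leave the request untouched.
        logger.warning("policy decision: false (monitoring mode, request forwarded)")
        return "forward"
    # Enforcing mode: the request is stopped (Gate answered 403 Forbidden in the examples above).
    return "block"
```

In other words, monitoring mode changes only the outcome of a denied request; allowed requests behave identically in both modes.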

The OPA plugin supports monitoring mode as well; enable it by adding `monitoring_mode: true` to its parameters, as shown below:

```yaml
- id: authz_allow_if_authed_pii
  type: opa
  enabled: false
  intercept: request
  parameters:
    # Merges shared settings from a `slashid_config` anchor defined elsewhere in gate.yaml
    <<: *slashid_config
    monitoring_mode: true
    policy_decision_path: /authz/allow
    policy: |
      package authz

      import future.keywords.if

      default allow := false

      key_found(obj, key) if { obj[key] }

      allow if not key_found(input.request.http.headers, "X-Gate-Anonymize-1")
```

Let’s send a request with PII data:

```
curl -vL https://gate:8080/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "gpt-3.5-turbo",
    "messages": [{"role": "user", "content": "email@email.com"}],
    "temperature": 0.7
  }'

{
  "error": {
    "message": "You didn't provide an API key. You need to provide your API key in an Authorization header using Bearer auth (i.e. Authorization: Bearer YOUR_KEY), or as the password field (with blank username) if you're accessing the API from your browser and are prompted for a username and password. You can obtain an API key from https://platform.openai.com/account/api-keys.",
    "type": "invalid_request_error",
    "param": null,
    "code": null
  }
}
```

The request passes through Gate (OpenAI rejects it only because no API key was provided), but Gate logs the policy violation:

```
gate-llm-demo-gate-1 | time=2023-09-14T01:57:40Z level=info msg=OPA decision: false decision_id=9f248489-4876-40ed-824e-17fb35306c3b decision_provenance={0.55.0 19fc439d01c8d667b128606390ad2cb9ded04b16-dirty 2023-09-02T15:18:29Z map[gate:{}]} plugin=opa req_path=/v1/chat/completions request_host=gate:8080 request_url=/v1/chat/completions
```

Block requests from users with unauthorized roles

With Gate we can also block requests to the OpenAI APIs that are unauthenticated or come from users who don’t belong to a specific group. In this example we’ll perform two actions:

- Use the JWT validator plugin to validate an Authorization header minted by SlashID, and check that the user belongs to the `openai_users` group.
- Use the token reminter plugin, together with the SlashID Data Vault storage module, to swap the SlashID Authorization header for the OpenAI API key and forward the request to the OpenAI APIs.

We first define a Python webhook to remint the token and swap the Authorization header:

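```python
import logging
import os
from typing import Dict, List, Optional

import jwt  # PyJWT
import requests
from fastapi import FastAPI, HTTPException, status
from pydantic import BaseModel, Field

logger = logging.getLogger(__name__)
app = FastAPI()


class Handle(BaseModel):
    # Assumed shape of a SlashID login handle, e.g. {"type": "email_address", "value": "..."}
    type: str
    value: str


class MintTokenRequest(BaseModel):
    slashid_token: str = Field(
        title="SlashID Token", example="eyJhbGciOiJIUzI1NiIsInR5cCI6Ik..."
    )
    person_id: str = Field(
        title="SlashID Person ID", example="cc006198-ef43-42ac-9b5a-b52713569d0f"
    )
    handles: Optional[List[Handle]] = Field(None, title="Person's login handles")


class MintTokenResponse(BaseModel):
    headers_to_set: Optional[Dict[str, str]] = None
    cookies_to_add: Optional[Dict[str, str]] = None


def retrieve_openai_key(person_id: str) -> Optional[str]:
    """Fetch the user's OpenAI API key from their SlashID Data Vault attributes."""
    url = f"https://api.slashid.com/persons/{person_id}/attributes/end_user_no_access"
    # Assumption: the SlashID API authenticates server-side calls with these
    # organization headers; the environment variable names are placeholders.
    headers = {
        "SlashID-OrgID": os.environ["SLASHID_ORG_ID"],
        "SlashID-API-Key": os.environ["SLASHID_API_KEY"],
    }
    response = requests.get(url, headers=headers, timeout=10)
    if response.status_code == 200:
        return response.json()["result"].get("openai_apikey")
    logger.error("Data Vault request failed with status code %s", response.status_code)
    return None


@app.post(
    "/remint_token",
    tags=["gate"],
    status_code=status.HTTP_201_CREATED,
    summary="Endpoint called by Gate to translate a SlashID token into a custom token",
)
def mint_token(request: MintTokenRequest) -> MintTokenResponse:
    # Log the person ID rather than the bearer token itself.
    logger.info("/remint_token: person_id=%s", request.person_id)
    try:
        # The signature is not checked here. This assumes the endpoint is only reachable
        # by Gate, whose validate-jwt plugin has already verified the token.
        token = jwt.decode(request.slashid_token, options={"verify_signature": False})
    except jwt.PyJWTError as e:
        raise HTTPException(
            status_code=status.HTTP_401_UNAUTHORIZED,
            detail=f"Invalid token: {e}",
        )

    openai_token = retrieve_openai_key(token.get("person_id"))
    if openai_token is None:
        raise HTTPException(
            status_code=status.HTTP_401_UNAUTHORIZED,
            detail="Unable to get OpenAI API key",
        )

    # Gate replaces the SlashID Authorization header with this one before forwarding.
    return MintTokenResponse(
        headers_to_set={"Authorization": "Bearer " + openai_token}, cookies_to_add=None
    )
```

The `mint_token` function decodes the SlashID JWT to obtain the user's person ID, retrieves the Data Vault bucket in which the user's OpenAI API key is stored, and returns a new Authorization header carrying the OpenAI key, which Gate sets on the forwarded request.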

Note: The reminter also works without SlashID. For instance, you could look up the OpenAI API key in your own HashiCorp Vault instance or in another location.
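As a minimal illustration of swapping the key source, the hypothetical helper below could replace `retrieve_openai_key` in the webhook. It reads a JSON map of person IDs to keys from an environment variable; both the function name and the `OPENAI_KEYS_BY_PERSON` variable are assumptions made for this sketch. For production use, a dedicated secrets manager such as HashiCorp Vault is the safer choice.

```python
import json
import os
from typing import Optional


def retrieve_openai_key_from_env(person_id: str) -> Optional[str]:
    """Look up a person's OpenAI API key in a JSON map held in an environment variable.

    Hypothetical alternative to retrieve_openai_key: OPENAI_KEYS_BY_PERSON is assumed
    to contain a JSON object such as {"<person_id>": "sk-..."}. Returns None when no
    key is found, matching the contract the webhook already handles.
    """
    raw = os.environ.get("OPENAI_KEYS_BY_PERSON")
    if not raw:
        return None
    try:
        keys = json.loads(raw)
    except json.JSONDecodeError:
        return None
    key = keys.get(person_id) if isinstance(keys, dict) else None
    return key if isinstance(key, str) and key else None
```

Because the helper keeps the same signature and the same `None` convention, the rest of `mint_token` would not need to change.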

Let’s now take a look at the Gate configuration:

```yaml
gate:
  plugins:
    - id: validator
      type: validate-jwt
      enable_http_caching: true
      enabled: false
      parameters:
        required_groups: ['openai_users']
        jwks_url: https://api.slashid.com/.well-known/jwks.json
        jwks_refresh_interval: 15m
        jwt_expected_issuer: https://api.slashid.com
    - id: pii_anonymizer
      type: anonymizer
      enabled: true
      parameters:
        anonymizers: |
          DEFAULT:
            type: keep
    - id: authz_deny_pii
      type: opa
      enabled: false
      intercept: request
      parameters:
        policy_decision_path: /authz/allow
        policy: |
          package authz

          import future.keywords.if

          default allow := false

          key_found(obj, key) if { obj[key] }

          allow if not key_found(input.request.http.headers, "X-Gate-Anonymize-1")
    - id: reminter
      type: token-reminting
      enabled: false
      parameters:
        remint_token_endpoint: http://backend:8000/remint_token
  port: '8080'
  log:
    format: text
    level: debug
  urls:
    - pattern: '*/v1/chat/completions*'
      target: https://api.openai.com/
      plugins:
        validator:
          enabled: true
        pii_anonymizer:
          enabled: true
        authz_deny_pii:
          enabled: true
        reminter:
          enabled: true
```

A lot of things are happening on the `*/v1/chat/completions*` endpoint:

- We first validate the Authorization header, including checking that the user belongs to the correct group, through the `validator` instance of the JWT validator plugin.
- We then check whether the request contains any PII with `pii_anonymizer`.
- Next, we block the request through the `authz_deny_pii` instance of the OPA plugin if any PII was detected.
- Finally, if the request made it this far, we use the `reminter` to swap the SlashID authorization token for the OpenAI API key and let the request through.
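The order of these stages matters: each one can stop the request before the OpenAI key is ever attached. A minimal Python sketch of that short-circuiting chain follows. Every function in it is a hypothetical stand-in for the corresponding Gate plugin with deliberately simplified logic (for instance, the validator only reads a pre-parsed `groups` list, whereas the real plugin verifies the JWT against the JWKS), and the specific status codes are assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Request:
    headers: Dict[str, str]
    body: str
    # Stand-in for the group claims the real validator reads from a verified SlashID JWT.
    groups: List[str] = field(default_factory=list)


# Each stage returns None to continue, or an HTTP status code to stop the request.
Stage = Callable[[Request], Optional[int]]


def validator(req: Request) -> Optional[int]:
    """Hypothetical stand-in for validate-jwt (no signature verification here)."""
    if not req.headers.get("Authorization", "").startswith("Bearer "):
        return 401
    if "openai_users" not in req.groups:
        return 403
    return None


def pii_anonymizer(req: Request) -> Optional[int]:
    """Hypothetical stand-in for PII detection with type: keep (a naive email check)."""
    if "@" in req.body:
        req.headers["X-Gate-Anonymize-1"] = "EMAIL_ADDRESS"
    return None


def authz_deny_pii(req: Request) -> Optional[int]:
    """Mirror of the OPA policy: deny when the first PII marker header is present."""
    return 403 if "X-Gate-Anonymize-1" in req.headers else None


def reminter(req: Request) -> Optional[int]:
    """Hypothetical stand-in for token reminting: swap in a placeholder OpenAI key."""
    req.headers["Authorization"] = "Bearer <openai-api-key>"
    return None


PIPELINE: List[Stage] = [validator, pii_anonymizer, authz_deny_pii, reminter]


def run(req: Request) -> int:
    """Apply the stages in order; the first one that returns a status stops the chain."""
    for stage in PIPELINE:
        status = stage(req)
        if status is not None:
            return status
    return 200  # stands for "forwarded to https://api.openai.com/"
```

For example, a request from an `openai_users` member whose body contains an email address stops at `authz_deny_pii`, so `reminter` never runs and the OpenAI key is never attached.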

In particular, the JWT validator checks the validity of the JWT as well as whether the user belongs to the `openai_users` group, as shown below:

```yaml
- id: validator
  type: validate-jwt
  enable_http_caching: true
  enabled: false
  parameters:
    required_groups: ['openai_users']
    jwks_url: https://api.slashid.com/.well-known/jwks.json
    jwks_refresh_interval: 15m
    jwt_expected_issuer: https://api.slashid.com
```

Let’s see it in action:

```
curl -vL https://gate:8080/v1/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer abc" \
  -d '{
    "model": "gpt-3.5-turbo",
    "messages": [{"role": "user", "content": "Say this is a test with SlashID!"}],
    "temperature": 0.7
  }'

{
  "id": "chatcmpl-abc123",
  "object": "chat.completion",
  "created": 1677858242,
  "model": "gpt-3.5-turbo-0613",
  "usage": {
    "prompt_tokens": 13,
    "completion_tokens": 7,
    "total_tokens": 20
  },
  "choices": [
    {
      "message": {
        "role": "assistant",
        "content": "\n\nThis is a test with SlashID!"
      },
      "finish_reason": "stop",
      "index": 0
    }
  ]
}
```

Conclusion

In this brief blog post we’ve demonstrated a few of the things you can do to securely access LLMs without compromising on privacy and security. Stay tuned for the next blog post on rate limiting and OpenAI APIs.

We’d love to hear any feedback you may have! Try out Gate with a free account. If you’d like to use the PII detection plugin, please contact us at contact@slashid.dev!